If you haven't read my previous post on sequential vs random access, please go to this link

http://technicalpostsbyarifkhan.blogspot.com/2013/12/random-vs-sequential-io-and-effects-on.html before proceeding further on this post.

This post is primarily focused on key look-ups when non clustered index is used to access data using Microsoft SQL Server as a database system.

Here is the key difference between clustered and non-clustered indexes.

Clustered Index: Leaf level pages of a clustered index are data pages (all columns of a row and possibly multiple rows in a page).

Non Clustered Index: Leaf level pages of a non-clustered index has non-clustered index key and a reference to its row in a data page. This reference is a clustered index key if there is a clustered index, otherwise reference to physical location of a row.

I can spend whole lot of time describing clustered and non-clustered indexes, that's not the purpose of this post.

Data consistency

Clustered and non-clustered indexes are two separate physical structures, maintaining consistency among these two separate structures is the key property of RDBMS systems, which is 'C for consistency' in ACID properties.

When data is written to a table, it's the responsibility of RDBMS system to update all indexes to maintain consistency, if update to any index fails, write operation fails and data is rolled back to previous state.

Now let's go to the core issue of this post i.e. key lookups used in SQL Server.

Here is the sample script to simulate key lookups...

CREATE TABLE DBO.TestNCIUsage (ci INT NOT NULL

, c2 int not null

, c3 int not null

, c4 int not null

, c5 varchar(100)

, nci int not null

, unqid uniqueidentifier default (newid()))

--insert sample data

DEClARE @i int =1

,@nciValue int = 2000000

WHILE (@i<2000000)

BEGIN

INSERT INTO DBO.TestNCIUsage(ci

,c2

,c3

,c4

,c5

,nci)

SELECT CAST(RAND()*10000000 AS INT) AS ci --random number

,2000 AS c2

,3000 AS c3

,4000 AS c4

,'SQL SERVER 2012 TESTING' AS c5

,@nciValue

SET @nciValue -=1;

SET @i+=1;

END

--cluster index is on randomly generated value

CREATE CLUSTERED INDEX CIX1 ON DBO.TestNCIUsage(ci)

CREATE NONCLUSTERED INDEX NCI_TestNCIUsage__nci ON TestNCIUsage(nci)

Cluster index on table is on ci and non-clustered index is on nci column.

Table is populated with 2M rows, value for ci is randomly generated and nci is a decreasing value staring from 2M.

1) Key lookups

select nci,c2 from DBO.TestNCIUsage

where nci between 1 and 4888

In the above select statement, columns nci & c2 are selected, nci has non-clustered index, but c2 is NOT in index.

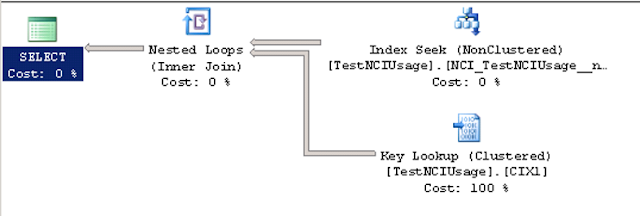

Here is the execution plan for the above query..

If we analyze this plan, two index structures are used to fetch data from disk and nested loops are used to join data.

Data access starts with non-clustered index, gets nci column values between 1 and 4888, each nci value in non-clustered index also has it's corresponding clustered index key (ci) at the leaf level, this clustered index key (ci) is used to traverse thru clustered index's B+ tree and fetches c2 from data pages, this is what is called key lookup.

Here is where previous post on sequential vs random access helps...

Each lookup into clustered index to get c2 may potentially end up with a random read.

This random read is not because of fragmentation, it's because each key look-up has to traverse thru clustered index B+ tree and fetch data from pages that are not logically ordered. Key lookups in the sample select statement may cause 4888 random reads, random reads are the most expensive I/O operations and the cost of each random read is calculated in previous post.

This random read is not because of fragmentation, it's because each key look-up has to traverse thru clustered index B+ tree and fetch data from pages that are not logically ordered. Key lookups in the sample select statement may cause 4888 random reads, random reads are the most expensive I/O operations and the cost of each random read is calculated in previous post.

Now let's change the select statement

2) Clustered index scan

select nci,c2 from DBO.TestNCIUsage

where nci between 1 and 4889

Here is the execution plan when upper bound in the where clause is changed to 4889.

Apparently, SQL Server optimizer has estimated too many random reads when value is changed to 4889 in the where clause, and decided to go for clustered index scan (which is almost similar to table scan).

It turns out, scanning whole 2M rows table sequentially is cheaper than causing 4889 random reads.

Key lookups causes random reads and random reads are the most expensive operations.

Key lookups are fine if you are reading small set of rows, it becomes problematic when you are fetching more data. General threshold seems to be around 5000 rows, more than 5000 tends to make SQL Server to go for clustered index scan, even if the table size is way larger than sample shown in this post. These numbers are approximate values, you need to test with your data.

Usage of non-clustered indexes with key look-ups works fine in transactional systems, where reads are smaller in size. It's an issue with data warehouses where large reads are performed, non-clustered index will be inefficient if the index is not covering all the columns used in the query while you are reading large number of rows.

There are lot of other factors that determines cost of I/O operations, like size of row, number of rows in a table & number of pages. We are not going into that discussion in this post.

There are couple of ways to make non clustered index cover all the selected columns

i) Include all columns as part of non-clustered index key, which is inefficient and may not work in case of unique indexes.

ii) Use SQL Server's INCLUDE clause to make columns as part of leaf level page instead of index key.

Key points from my two posts..

(1) Minimize fragmentation of data.

(2) Minimize operations that cause random reads.

(3) Non-clustered index is inefficient for large reads, if the index is not covering, this will be worse, you are paying cost to update index in write operations, but not using it in reads.

I hope these two posts were helpful.

Check my other non-technical post

http://technicalpostsbyarifkhan.blogspot.com/2013/12/impact-of-technology-on-businesses.html

http://technicalpostsbyarifkhan.blogspot.com/2013/12/impact-of-technology-on-businesses.html

If you like this post, please do not forget to share it on social networks by clicking buttons below.